Landscape Research Report: Difference between revisions

(→Advertising and commerce: added figure from Roomle AR app) |

|||

| (57 intermediate revisions by 3 users not shown) | |||

| Line 2: | Line 2: | ||

This report provides a thorough analysis of the landscape of immersive interactive XR technologies carried out in the time period July 2019 until November 2020 by the members of the XR4ALL consortium. It is based on the expertise and contribution by a large number of researchers from Fraunhofer HHI, B<>com and Image & 3D Europe. For some sections, additional experts outside the consortium were invited to contribute. | This report provides a thorough analysis of the landscape of immersive interactive XR technologies carried out in the time period July 2019 until November 2020 by the members of the XR4ALL consortium. It is based on the expertise and contribution by a large number of researchers from Fraunhofer HHI, B<>com and Image & 3D Europe. For some sections, additional experts outside the consortium were invited to contribute. | ||

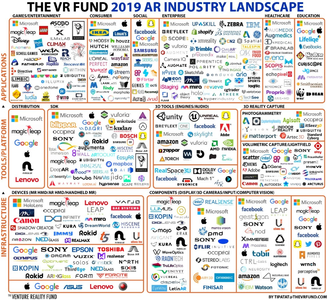

The document is organised as follows. In | The document is organised as follows. In the next section, the scope of eXtended Reality (XR) is defined, setting clear definitions of fundamental terms in this domain. A detailed market analysis is presented in Sec. [[#XR market watch]]. It covers the development and forecast of XR technologies based on an in-depth analysis of the most recent surveys and reports from various market analysts and consulting firms. The major application domains are derived from these reports. Furthermore, the investments and expected shipment of devices are reported. Based on the latest analysis by the Venture Reality Fund, the main players and sectors in VR & AR are laid out. The Venture Reality Fund is an investment company looking at technology domains ranging from artificial intelligence and augmented reality to virtual reality to power the future of computing. A complete overview of international, European and regional associations in XR and a recent patent overview conclude this section. | ||

In | In section [[#XR technologies]], a complete and detailed overview is given on all the relevant technologies that are necessary for the successful development of future immersive and interactive technologies. Latest research results and the current state-of-the-art are described with a comprehensive list of references. | ||

The major application domains in XR are presented in | The major application domains in XR are presented in section [[#XR applications]]. Several up-to-date examples are given in order to demonstrate the capabilities of this technology. | ||

In | In section [[#Standards]], the relevant standards and their current state are described. Finally, in section [[#Review of current EC research]], a detailed overview is given of EC projects that were or are still active in the domain of XR technologies. The projects are clustered in different application domains, which demonstrates the widespread applicability of immersive and interactive technologies. | ||

= The scope of eXtended Reality = | = The scope of eXtended Reality = | ||

| Line 21: | Line 21: | ||

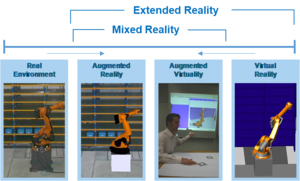

Mixed Reality (MR) includes both AR and AV. It blends real and virtual worlds to create complex environments, where physical and digital elements can interact in real-time. It is defined as a continuum between the real and the virtual environments but excludes both of them. | Mixed Reality (MR) includes both AR and AV. It blends real and virtual worlds to create complex environments, where physical and digital elements can interact in real-time. It is defined as a continuum between the real and the virtual environments but excludes both of them. | ||

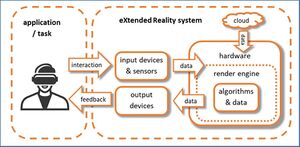

An important question to answer is how broadly the term eXtended Reality (XR) spans across technologies and application domains. XR could be considered as a fusion of AR, AV, and VR technologies, but in fact it involves many more technology domains. The necessary domains range from sensing the world (such as image, video, sound, haptic) to processing the data and rendering. In addition, hardware is involved to sense, capture, track, register, display, and to do many more things. | An important question to answer is how broadly the term eXtended Reality (XR) spans across technologies and application domains. XR could be considered as a fusion of AR, AV, and VR technologies, but in fact it involves many more technology domains. The necessary domains range from sensing the world (such as image, video, sound, haptic) to processing the data and rendering. In addition, hardware is involved to sense, capture, track, register, display, and to do many more things. | ||

In Figure 2, a simplified schematic diagram of an eXtended Reality system is presented. On the left-hand side, the user is performing a task by using an XR application. In section | In Figure 2, a simplified schematic diagram of an eXtended Reality system is presented. On the left-hand side, the user is performing a task by using an XR application. In section [[#XR Applications]], a complete overview of all the relevant domains is given covering advertisement, cultural heritage, education and training, industry 4.0, health and medicine, security, journalism, social VR and tourism. The user interacts with the scene and this interaction is captured with a range of input devices and sensors, which can be visual, audio, motion, and many more (see [[#Video capture for XR]] and [[#3D sound capture]]). The acquired data serves as input for the XR hardware where further necessary processing in the render engine is performed (see [[#Render engines and authoring tools]]). For example, the correct view point is rendered or the desired interaction with the scene is triggered. In sections [[#Scene analysis and computer vision]] and [[#3D sound processing algorithms]], an overview of the major algorithms and approaches is given. However, not only captured data is used in the render engine, but also additional data that comes from other sources such as edge cloud servers (see [[#Cloud services]]) or 3D data available on the device itself. The rendered scene is then fed back to the user so that they can perceive the scene. This is achieved by various means such as XR headsets or other types of displays and other sensory stimuli. | ||

The complete set of technologies and applications will be described in the following chapters. | The complete set of technologies and applications will be described in the following chapters. | ||

[[File:XR System v1.0.jpg|alt=|center|thumb|Figure 2: Major components of an eXtended Reality system.]] | [[File:XR System v1.0.jpg|alt=|center|thumb|Figure 2: Major components of an eXtended Reality system.]] | ||

| Line 31: | Line 31: | ||

==Market development and forecast == | ==Market development and forecast == | ||

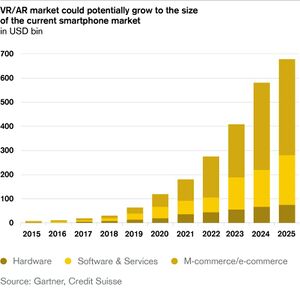

Market research experts all agree on the tremendous growth potential for the XR market. The global AR and VR market by device, offering, application, and vertical, was valued at around USD 26.7 billion in 2018 by Zion Market Research. According to the report issued in February 2019, the global market is expected to reach approximately USD 814.7 billion by 2025, at a compound annual growth rate (CAGR) of 63.01% between 2019 and 2025 <ref>Zion Market Research. https://www.zionmarketresearch.com/report/augmented-and-virtual-reality-market (accessed Nov. 11, 2020)</ref>. Mordor Intelligence expects a similar annual growth rate of over 65% for the forecast period from 2019 to 2024 <ref></ref> | Market research experts all agree on the tremendous growth potential for the XR market. The global AR and VR market by device, offering, application, and vertical, was valued at around USD 26.7 billion in 2018 by Zion Market Research. According to the report issued in February 2019, the global market is expected to reach approximately USD 814.7 billion by 2025, at a compound annual growth rate (CAGR) of 63.01% between 2019 and 2025 <ref>Zion Market Research. https://www.zionmarketresearch.com/report/augmented-and-virtual-reality-market (accessed Nov. 11, 2020)</ref>. Mordor Intelligence expects a similar annual growth rate of over 65% for the forecast period from 2019 to 2024 <ref name=":0">“Extended Reality (XR) Market - Growth, trends, and forecast.” Mordor Intelligence. <nowiki>https://www.mordorintelligence.com/industry-reports/extended-reality-xr-market</nowiki> (accessed Nov. 11, 2020).</ref>. It is assumed that the convergence of smartphones, mobile VR headsets, and AR glasses into a single XR wearable could replace all the other screens, ranging from mobile devices to smart TV screens. Mobile XR has the potential to become one of the world’s most ubiquitous and disruptive computing platforms. 
Forecasts by MarketsandMarkets <ref name=":1">“Augmented Reality Market worth $72.7 billion by 2024.” Marketsandmarkets. <nowiki>https://www.marketsandmarkets.com/PressReleases/augmented-reality.asp</nowiki> (accessed Nov. 11, 2020).</ref><ref name=":2">“Virtual Reality Market worth $20.9 billion by 2025.” Marketsandmarkets. <nowiki>https://www.marketsandmarkets.com/PressReleases/ar-market.asp</nowiki> (accessed Nov. 11, 2020).</ref> individually expect the AR and VR markets by offering, device type, application, and geography, to reach USD 72.7 billion by 2024 (AR, valued at USD 10.7 billion in 2019) and USD 20.9 billion (VR, valued at USD 6.1 billion in 2020) by 2025. Gartner and Credit Suisse <ref name=":27">U. Neumann. “Virtual and Augmented Reality have great growth potential.” Credit Suisse. <nowiki>https://www.credit-suisse.com/ch/en/articles/private-banking/virtual-und-augmented-reality-201706.html</nowiki> (accessed Nov. 11, 2020).</ref><ref name=":3">U. Neumann. “Increased integration of augmented and virtual reality across industries.” Credit Suisse. <nowiki>https://www.credit-suisse.com/ch/en/articles/private-banking/zunehmende-einbindung-von-Virtual-und-augmented-reality-in-allen-branchen-201906.html</nowiki> (accessed Nov. 11, 2020).</ref> predict significant market growth for VR & AR hardware and software due to promising opportunities across sectors up to 600-700 billion USD in 2025 (see Figure 3). With 762 million users owning an AR-compatible smartphone in July 2018, the AR consumer segment is expected to grow substantially, also fostered by AR development platforms such as ARKit (Apple) and ARCore (Google). | ||
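As a rough plausibility check on the forecast figures quoted above, the compound annual growth rate implied by a start value and an end value can be recomputed directly. The following is a minimal sketch, not part of the cited reports: the helper names `cagr` and `project` are hypothetical, and the assumption that the 2018 valuation compounds over seven years up to 2025 is ours (the report itself states the rate for 2019 to 2025).

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate implied by a start value, an end value and a span in years."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: float) -> float:
    """Value reached after compounding start_value at the given annual rate."""
    return start_value * (1.0 + rate) ** years


if __name__ == "__main__":
    # Figures quoted in the text from Zion Market Research (USD billion).
    # Assumption (ours): the 2018 valuation is compounded over 7 years to reach 2025.
    implied = cagr(26.7, 814.7, 7)
    print(f"implied CAGR 2018-2025: {implied:.2%}")
    print(f"26.7 compounded at 63.01% for 7 years: {project(26.7, 0.6301, 7):.1f}")
```

Under that seven-year assumption, the implied rate comes out close to the reported 63.01%. Applying the same function to the MarketsandMarkets AR figures (USD 10.7 billion in 2019, USD 72.7 billion in 2024, five years) gives roughly 47% per year.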

[[File:VR AR market forecast Gartner.jpg|center|thumb|Figure 3: VR/AR market forecast by Gartner and Credit Suisse]] | [[File:VR AR market forecast Gartner.jpg|center|thumb|Figure 3: VR/AR market forecast by Gartner and Credit Suisse<ref name=":27" />]] | ||

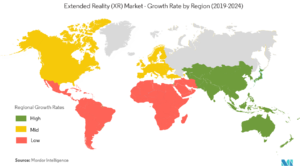

[[File:Figure 4- Market growth rates by worldwide regions .png|thumb|Figure 4: Market growth rates by worldwide regions ]] | [[File:Figure 4- Market growth rates by worldwide regions .png|thumb|Figure 4: Market growth rates by worldwide regions<ref name=":0" /> ]] | ||

Several recent market studies including <ref>< | Several recent market studies including <ref name=":2" /><ref name=":4">Research and Markets. <nowiki>https://www.researchandmarkets.com/reports/4746768/virtual-reality-market-by-offering-technology</nowiki> (accessed Nov. 11, 2020).</ref> have factored in the COVID-19 impact - yet to fully manifest itself - identifying growth drivers and barriers. Technavio forecasts a CAGR of over 35% and a market growth of $125.19 billion during 2020-2024 <ref name=":5">Businesswire. <nowiki>https://www.businesswire.com/news/home/20200903005356/en/COVID-19-Impacts-Augmented-Reality-AR-and-Virtual-Reality-VR-Market-Will-Accelerate-at-a-CAGR-of-Over-35-Through-2020-2024</nowiki> -The-Increasing-Demand-for-AR-and-VR-Technology-to-Boost-Growth-Technavio (accessed Nov. 11, 2020).</ref>. The identified growth drivers are the increasing demand for AR/VR technology, e.g. an increasing demand for VR/AR HMDs in the healthcare sector <ref name=":4" /><ref>“Impact analysis of covid-19 on augmented reality (AR) in healthcare market.” Researchdive. <nowiki>https://www.researchdive.com/covid-19-insights/218/global-augmented-reality-ar-in-healthcare-market</nowiki> (accessed Nov. 11, 2020).</ref>, or in general for remote work <ref name=":6">“Augmented and Virtual Reality: Visualizing Potential Across Hardware, Software, and Services.” ABIresearch. <nowiki>https://www.abiresearch.com/whitepapers/</nowiki> (accessed Nov. 11, 2020).</ref> and socializing <ref name=":7">Digi-Capital. <nowiki>https://www.digi-capital.com/news/2020/04/how-covid-19-change-ar-vr-future/</nowiki> (accessed Nov. 11, 2020).</ref>. Barriers, on the other hand, include the potentially high cost of XR app development <ref name=":5" /> and the adverse impact of COVID-19 on the supply chain of the markets <ref name=":2" /> <ref name=":7" /><ref name=":8">M. Koytcheva. “Pandemic makes Extended Reality a hot ticket.” CCS Insight. 
<nowiki>https://my.ccsinsight.com/reportaction/D17106/Toc</nowiki> (accessed Nov. 11, 2020).</ref>, among others. | ||

Regionally, the annual growth rate will be particularly high in Asia, moderate in North America and Europe, and low in other regions of the world <ref | Regionally, the annual growth rate will be particularly high in Asia, moderate in North America and Europe, and low in other regions of the world <ref name=":0" /><ref name=":4" /> (see Figure 4). MarketsandMarkets finds Asia to lead the VR market by 2024 <ref name=":2" />, and to lead the AR market by 2025 <ref name=":1" />, whereas the US is still dominating the XR market with the large number of global players during the forecast period. | ||

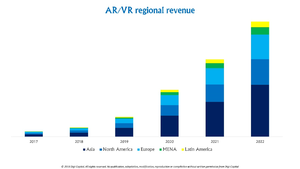

With the XR market growing exponentially, Europe is expected to account for about one fifth of the market in 2022 | With the XR market growing exponentially, Europe is expected to account for about one fifth of the market in 2022 <ref name=":28">T. Merel. “Ubiquitous AR to dominate focused VR by 2022.” TechCrunch. <nowiki>https://techcrunch.com/2018/01/25/ubiquitous-ar-to-dominate-focused-vr-by-2022/</nowiki> (accessed Nov. 11, 2020).</ref>, with Asia as the leading region (mainly China, Japan, and South Korea) followed by North America and Europe at almost the same level (see Figure 5). The study in <ref>“European VR and AR market growth to 'outpace' North America by 2023.” Optics.org. <nowiki>https://optics.org/news/10/10/18</nowiki> (accessed Nov. 27, 2020).</ref> even sees Europe in 2023 in second position among worldwide revenue regions (25%), after Asia (51%) and ahead of North America (17%). In a study about the VR and AR ecosystem in Europe in 2016/2017 <ref name=":9">ECORYS, “Virtual reality and its potential for Europe”, [Online]. Available: <nowiki>https://ec.europa.eu/futurium/en/system/files/ged/vr_ecosystem_eu_report_0.pdf</nowiki> </ref>, Ecorys identified the potential for Europe if it plays to its strengths, namely building on its creativity, skills, and cultural diversity. Leading countries in VR development include France, the UK, Germany, The Netherlands, Sweden, Spain, and Switzerland. A lot of potential is seen for Finland, Denmark, Italy, Greece as well as Central and Eastern Europe. In 2017, more than half of the European companies had suppliers and customers from around the world. | ||

[[File:Figure 5- AR-VR regional revenue between 2017 and 2022.png|center|thumb|Figure 5: AR/VR regional revenue between 2017 and 2022 ]] | [[File:Figure 5- AR-VR regional revenue between 2017 and 2022.png|center|thumb|Figure 5: AR/VR regional revenue between 2017 and 2022<ref name=":28" /> ]] | ||

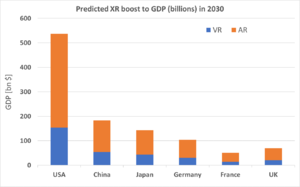

PwC released a study about the impact of AR and VR on the global economy by 2030 | PwC released a study about the impact of AR and VR on the global economy by 2030 <ref name=":10">“Seeing is believing, How VR and AR will transform business and the economy.” PwC. <nowiki>https://www.pwc.com/seeingisbelieving</nowiki> (accessed Nov. 11, 2020).</ref>, highlighting the development in several countries. Globally, AR makes a higher contribution to gross domestic product (GDP) than VR. The USA is expected to have the highest boost to GDP by 2030, followed by China and Japan (see Figure 6). | ||

[[File:Figure 6- Global XR boost to gross domestic product by 2030.png|center|thumb|Figure 6: Global XR boost to gross domestic product by 2030 ]] | [[File:Figure 6- Global XR boost to gross domestic product by 2030.png|center|thumb|Figure 6: Global XR boost to gross domestic product by 2030<ref name=":10" /> ]] | ||

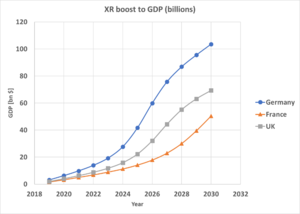

Among the major European countries Germany, France and the UK, the largest XR boost is expected for Germany, followed by France and the UK (see Figure 7). | Among the major European countries Germany, France and the UK, the largest XR boost is expected for Germany, followed by France and the UK (see Figure 7). | ||

[[File:Figure 7- Major EU countries XR boost to gross domestic product from 2019 to 2030 .png|center|thumb|Figure 7: Major EU countries XR boost to gross domestic product from 2019 to 2030]] | [[File:Figure 7- Major EU countries XR boost to gross domestic product from 2019 to 2030 .png|center|thumb|Figure 7: Major EU countries XR boost to gross domestic product from 2019 to 2030<ref name=":10" />]] | ||

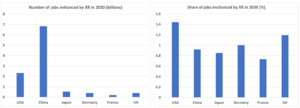

XR technology adoption is expected to have a major impact on employment worldwide in terms of job enhancement (see Figure 8). From nearly 825 000 jobs enhanced in 2019, a rise to more than 23 million in 2030 is expected worldwide | XR technology adoption is expected to have a major impact on employment worldwide in terms of job enhancement (see Figure 8). From nearly 825 000 jobs enhanced in 2019, a rise to more than 23 million in 2030 is expected worldwide <ref name=":10" />. China outnumbers all other countries in absolute numbers. Considering the share of jobs enhanced, the USA, UK and Germany are among the countries expected to experience the largest boost. | ||

[[File:Figure 8- Job enhancement by 2030 through XR.png|center|thumb|Figure 8: Job enhancement by 2030 through XR]] | [[File:Figure 8- Job enhancement by 2030 through XR.png|center|thumb|Figure 8: Job enhancement by 2030 through XR<ref name=":10" />]] | ||

== Areas of application == | == Areas of application == | ||

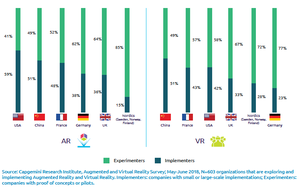

[[File:Figure 9- Distribution of VR-AR companies analysed in survey by Capgemini Research .png|thumb|Figure 9: Distribution of VR/AR companies analysed in survey by Capgemini Research]] | [[File:Figure 9- Distribution of VR-AR companies analysed in survey by Capgemini Research .png|thumb|Figure 9: Distribution of VR/AR companies analysed in survey by Capgemini Research<ref name=":11" />]] | ||

Within the field of business operations and field services, AR/VR implementations are found to be prevalent in four areas, where repair and maintenance have the strongest focus, closely followed by design and assembly. Other popular areas of implementation cover immersive training, and inspection and quality assurance | Within the field of business operations and field services, AR/VR implementations are found to be prevalent in four areas, where repair and maintenance have the strongest focus, closely followed by design and assembly. Other popular areas of implementation cover immersive training, and inspection and quality assurance <ref name=":11">“Augmented and Virtual Reality in Operations: A guide for investment.” Capgemini. <nowiki>https://www.capgemini.com/research-old/augmented-and-virtual-reality-in-operations/</nowiki> (accessed Nov. 11, 2020).</ref>. Benefits from implementing AR/VR technologies include substantial increases in efficiency, safety, productivity, and reduction in complexity. | ||

In a survey conducted in 2018 | In a survey conducted in 2018 <ref name=":11" />, Capgemini Research Institute focused on the use of AR/VR in business operations and field services in the automotive, manufacturing, and utilities sectors; companies considered were located in the US (30%), Germany, UK, France, China (each 15%) and the Nordics (Sweden, Norway, Finland). They found that, among 600+ companies with AR/VR initiatives (experimenting or implementing AR/VR), about half expect that AR/VR will become mainstream in their organisation within the next three years, while the other half predominantly expect this to happen in less than five years. AR is seen as more applicable than VR; consequently, more organisations are implementing AR (45%) than VR (36%). Companies in the US, China, and France are currently leading in implementing AR and VR technologies (see Figure 9). All European countries have as many or fewer implementers of AR and VR than the US and China. A diagram relating US and China vs. Europe is not available. | ||

The early adopters of XR technologies in Europe are in the automotive, aviation, and machinery sectors, but the medical sector also plays an important role. R&D focuses on healthcare, industrial use and general advancements of this technology | The early adopters of XR technologies in Europe are in the automotive, aviation, and machinery sectors, but the medical sector also plays an important role. R&D focuses on healthcare, industrial use and general advancements of this technology <ref name=":11" />. Highly specialised research hubs support the European market growth by advancing VR technology and applications and also generate a highly skilled workforce, bringing non-European companies to Europe for R&D. Content-wise, the US market is focused on entertainment while Asia is active in content production for the local markets. Europe benefits from its cultural diversity and a tradition of collaboration, in part fostered by European funding policies, leading to very creative content production. | ||

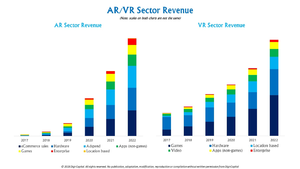

It is also interesting to compare VR and AR with respect to the field of applications (see Figure 10). Due to a smaller installed base, lower mobility and exclusive immersion, VR will be more focussed on entertainment use cases and revenue streams such as in games, location-based entertainment, video, and related hardware, whereas AR will be more based on e-commerce, advertisement, enterprise applications, and related hardware | It is also interesting to compare VR and AR with respect to the field of applications (see Figure 10). Due to a smaller installed base, lower mobility and exclusive immersion, VR will be more focussed on entertainment use cases and revenue streams such as in games, location-based entertainment, video, and related hardware, whereas AR will be more based on e-commerce, advertisement, enterprise applications, and related hardware <ref name=":3" />. | ||

[[File:Figure 10- Separated AR and VR sector revenue from 2017 to 2022 .png|center|thumb|Figure 10: Separated AR and VR sector revenue from 2017 to 2022. | [[File:Figure 10- Separated AR and VR sector revenue from 2017 to 2022 .png|center|thumb|Figure 10: Separated AR and VR sector revenue from 2017 to 2022.<ref name=":3" /> |alt=Note: This diagram represents just relations of revenue between different sectors, the scale for AR and VR are not the same.]] | ||

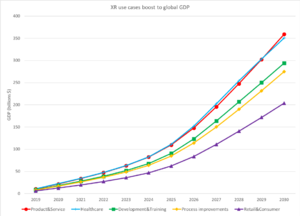

A PwC analysis | A PwC analysis <ref name=":10" /> groups major use cases into five categories: (1) Product and service development; (2) Healthcare; (3) Development and training; (4) Process improvements; (5) Retail and consumer. | ||

Among those, XR technologies for product and service development as well as healthcare are expected to have the highest impact with a potential boost to GDP of over $350 billion by 2030 (see Figure 11). | Among those, XR technologies for product and service development as well as healthcare are expected to have the highest impact with a potential boost to GDP of over $350 billion by 2030 (see Figure 11). | ||

[[File:Figure 11- XR use cases boost to gross domestic product from 2019 to 2030.png|center|thumb|Figure 11: XR use cases boost to gross domestic product from 2019 to 2030 ]] | [[File:Figure 11- XR use cases boost to gross domestic product from 2019 to 2030.png|center|thumb|Figure 11: XR use cases boost to gross domestic product from 2019 to 2030<ref name=":10" /> ]] | ||

== Investments == | == Investments == | ||

[[File:Figure 12- XR4ALL analysis of European investors for start-ups.png|thumb|Figure 12: XR4ALL analysis of European investors for start-ups]] | [[File:Figure 12- XR4ALL analysis of European investors for start-ups.png|thumb|Figure 12: XR4ALL analysis of European investors for start-ups<ref name=":29" />]] | ||

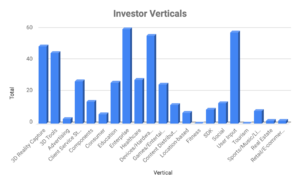

While XR industries are characterised by global value chains, it is important to be aware of the different types of investments available and of the cultural settings present. In the US, AR/VR start-ups benefit from the availability of venture capital for early technology development. Asian market growth is driven by concerted government efforts. Digi-Capital tracked over $5.4 billion of XR investments in the 12 months from Q3 2018 to Q2 2019, showing that Chinese companies invested 2.5 times as much as their North American counterparts during this period | While XR industries are characterised by global value chains, it is important to be aware of the different types of investments available and of the cultural settings present. In the US, AR/VR start-ups benefit from the availability of venture capital for early technology development. Asian market growth is driven by concerted government efforts. Digi-Capital tracked over $5.4 billion of XR investments in the 12 months from Q3 2018 to Q2 2019, showing that Chinese companies invested 2.5 times as much as their North American counterparts during this period <ref>“AR/VR investment and M&A opportunities as startup valuations soften.” Digi-Capital. <nowiki>https://www.digi-capital.com/news/2019/07/ar-vr-investment-and-ma-opportunities-as-early-stage-valuations-soften/</nowiki> (accessed Nov. 11, 2020).</ref>. Worldwide investment dropped considerably in the 12 months to Q1 2020 <ref>“VR/AR investment at pre-Facebook/Oculus levels in Q1.” Digi-Capital. <nowiki>https://www.digi-capital.com/news/2020/05/vr-ar-investment-pre-facebook-oculus-levels/</nowiki> (accessed Nov. 11, 2020).</ref>, with the US and China continuing to dominate XR investment, followed by Israel, the UK and Canada. 
In Europe, the availability of research funding fostered a tradition in XR research and the creation of niche and high-precision technologies. The XR4ALL Consortium has compiled a list of over 455 investors investing in XR start-ups in Europe <ref name=":29">L. Segers and D. Del Olmo, “Deliverable D5.1 Map of funding sources for XR technologies”, LucidWeb, XR4ALL project, 2019, [Online]. Available: <nowiki>http://xr4all.eu/wp-content/uploads/d5.1-map-of-funding-sources-for-xr-technologies_final-1.pdf</nowiki> (accessed Nov. 11, 2020).</ref>. The investments range from 2008-2019. A preliminary analysis shows that the verticals attracting the greatest numbers of investors are: Enterprise, User Input, Devices/Hardware, and 3D Reality Capture (see Figure 12). | ||

The use cases that are forecast by IDC to receive the largest investment in 2023 are education/training ($8.5 billion), industrial maintenance ($4.3 billion), and retail showcasing ($3.9 billion) | The use cases that are forecast by IDC to receive the largest investment in 2023 are education/training ($8.5 billion), industrial maintenance ($4.3 billion), and retail showcasing ($3.9 billion) <ref>“Commercial and public sector investments will drive worldwide AR/VR spending to $160 billion in 2023, according to a new IDC spending guide.” IDC. <nowiki>https://www.idc.com/getdoc.jsp?containerId=prUS45123819</nowiki> (accessed Nov. 11, 2020).</ref>. A total of $20.8 billion is expected to be invested in VR gaming, VR video/feature viewing, and AR gaming. The fastest spending growth is expected for the following: AR for lab and field education, AR for public infrastructure maintenance, and AR for anatomy diagnostics in the medical domain. | ||

== Shipment of devices == | == Shipment of devices == | ||

Shipments of VR headsets have been growing steadily for several years and reached 4 million devices in 2018 | Shipments of VR headsets have been growing steadily for several years and reached 4 million devices in 2018 <ref>H. Tankovska. “Unit shipments of Virtual Reality (VR) devices worldwide from 2017 to 2019 (in millions), by vendor.” Statista. <nowiki>https://www.statista.com/statistics/671403/global-virtual-reality-device-shipments-by-vendor/</nowiki> (accessed Nov. 11, 2020).</ref>. They rose to around 6 million in 2019, and the market is mainly dominated by North American companies (e.g. Facebook Oculus) and major Asian manufacturers (e.g. Sony, Samsung, and HTC Vive) (see Figure 13). The growth on the application side is even higher. For instance, on the gaming platform Steam, the yearly growth rate of monthly-connected headsets is up 80% since 2017 <ref>B. Lang. “Analysis: Monthly-connected VR Headsets on Steam Pass 1 Million Milestone.” Road to VR. <nowiki>https://www.roadtovr.com/monthly-connected-vr-headsets-steam-1-million-milestone/</nowiki> (accessed Nov. 11, 2020).</ref>. | ||

The situation is completely different for AR headsets. Compared to VR, the shipments of AR headsets in 2017 were much lower (less than 0.4 million), but the actual growth rate is much higher than for VR headsets | The situation is completely different for AR headsets. Compared to VR, the shipments of AR headsets in 2017 were much lower (less than 0.4 million), but the actual growth rate is much higher than for VR headsets <ref>H. Tankovska. “Smart augmented reality glasses unit shipments worldwide from 2016 to 2022.” Statista. <nowiki>https://www.statista.com/statistics/610496/smart-ar-glasses-shipments-worldwide/</nowiki> (accessed Nov. 11, 2020).</ref> (see Figure 14). In 2019, the number of unit shipments was almost at the same level for AR and VR headsets (about 6 million), and, beyond 2019, it is expected to be much higher for AR. This is most likely because there is a wider range of applications for AR than for VR (see also [[#Areas of application]]). | ||

<gallery mode="packed"> | <gallery mode="packed" widths="300" heights="200" perrow="2"> | ||

File:Figure 13- VR unit shipments in the last three years.png| | File:Figure 13- VR unit shipments in the last three years.png|alt=cited: [24] H. Tankovska. “Unit shipments of Virtual Reality (VR) devices worldwide from 2017 to 2019 (in millions), by vendor.” Statista. https://www.statista.com/statistics/671403/global-virtual-reality-device-shipments-by-vendor/ (accessed Nov. 11, 2020).|Figure 13: VR unit shipments in the last three years | ||

File:Figure 14- Forecast of AR unit shipments from 2016 to 2022 .png| | File:Figure 14- Forecast of AR unit shipments from 2016 to 2022 .png|alt=cited: [26] H. Tankovska. “Smart augmented reality glasses unit shipments worldwide from 2016 to 2022.” Statista. https://www.statista.com/statistics/610496/smart-ar-glasses-shipments-worldwide/ (accessed Nov. 11, 2020).|Figure 14: Forecast of AR unit shipments from 2016 to 2022 | ||

</gallery> | </gallery> | ||

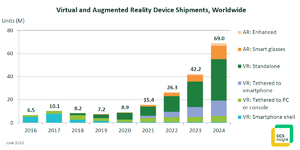

Shipments of VR and AR devices are expected to grow considerably from below 9 million devices in 2020 to more than 50 million devices by 2024 | Shipments of VR and AR devices are expected to grow considerably from below 9 million devices in 2020 to more than 50 million devices by 2024 <ref name=":8" /> (see Figure 15). However, the shipments of smartphone shell VR will decrease and only the shipments of AR, standalone VR and tethered VR devices will increase substantially. In particular, the growth of standalone VR devices seems to be predominant, since the first systems appeared on the market in 2018 and global players like Oculus and HTC launched their solutions in 2019. ABI Research predicts that over 70% of VR shipments in 2024 will be standalone devices <ref name=":6" />. | ||

[[File:Figure 15- Forecast of VR and AR shipments .png|center|thumb|Figure 15: Forecast of VR and AR shipments<ref name=":8" /> ]]

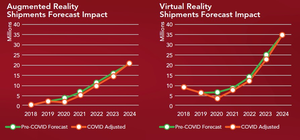

Taking into account COVID-19 impacts, pre-COVID expectations for AR and VR shipments will be reached in 2024 <ref name=":6" /> (see Figure 16).

[[File:Figure 16- Forecast of VR and AR shipments with-without COVID-19 impact.png|center|thumb|Figure 16: Forecast of VR and AR shipments with/without COVID-19 impact]]

== Main players ==

With a multitude of players from start-ups and SMEs to very large enterprises, the VR/AR market is fragmented <ref>“Augmented and Virtual Reality.” European Commission. <nowiki>https://ec.europa.eu/growth/tools-databases/dem/monitor/category/augmented-and-virtual-reality</nowiki> (accessed Nov. 11, 2020).</ref>, and dominated by US internet giants such as Google, Apple, Facebook, Amazon, and Microsoft. By contrast, European innovation in AR and VR is largely driven by SMEs and start-ups <ref name=":9" />.

Main XR players <ref name=":3" /><ref name=":9" /> are from (1) the US (e.g., Google, Microsoft, Oculus, Eon Reality, Vuzix, CyberGlove Systems, Leap Motion, Sensics, Sixsense Enterprises, WorldViz, Firsthand Technologies, Virtuix, Merge Labs, SpaceVR), and (2) the Asia-Pacific region (e.g., Japan: Sony, Nintendo; South Korea: Samsung Electronics; Taiwan: HTC). Besides the main players, there are plenty of SMEs and smaller companies worldwide. Figure 17 gives an overview of the AR industry landscape, while Figure 18 depicts the current VR industry landscape.

In addition to the above corporate activities, Europe also has a long-standing tradition in research <ref name=":9" />. Fundamental questions are generally pursued by European universities such as ParisTech (FR), Technical University of Munich (DE), and King’s College (UK) and by non-university research institutes like B<>com (FR), Fraunhofer Society (DE), and INRIA (FR). Applied research is also relevant, and this is also true for the creative sector. An important part is also played by associations, think tanks, and institutions such as EuroXR, Realities Centre (UK), VRBase (NL/DE) and Station F (FR) that connect stakeholders, provide support, and enable knowledge transfer. Research activities tend to concentrate in France, the UK, and Germany, while business activities tend to concentrate in France, Germany, the UK, and The Netherlands.

The VR Fund published the VR/AR industry landscapes <ref name=":30">The Venture Reality Fund. <nowiki>https://www.thevrfund.com/landscapes</nowiki> (accessed Nov. 11, 2020).</ref> providing a good overview of industry players. Besides some of the companies already mentioned, one finds other well-known European XR companies such as: Ultrahaptics (UK), Improbable (UK), Varjo (FI), Meero (FR), CCP Games (IS), Immersive Rehab (UK), and Pupil Labs (DE). Others are Jungle VR, Light & Shadows, Lumiscaphe, Thales, Techviz, Immersion, Haption, Backlight, ac3 studio, ARTE, Diota, TF1, Allegorithmic, Saint-Gobain, Diakse, Wonda, Art of Corner, Incarna, Okio studios, Novelab, Timescope, Adok, Hypersuit, Realtime Robotics, Wepulsit, Holostoria, Artify, VR-bnb, Hololamp (France), and many more.<gallery mode="packed" widths="300" heights="200" perrow="2">
File:Figure 17- AR Industry Landscape by Venture Reality Fund.png|Figure 17: AR Industry Landscape by Venture Reality Fund
File:Figure 18- VR Industry Landscape by Venture Reality Fund .png|Figure 18: VR Industry Landscape by Venture Reality Fund
</gallery><ref name=":30" />

== International, European and regional associations in XR ==

| Line 97: | Line 99: | ||

'''XR Association (XRA)'''

The XRA’s mission is to promote responsible development and adoption of virtual and augmented reality globally with best practices, dialogue across stakeholders, and research <ref>XRA. <nowiki>https://xra.org/</nowiki> (accessed Nov. 11, 2020).</ref>. The XRA is a resource for industry, consumers, and policymakers interested in virtual and augmented reality. XRA is an evolution of the Global Virtual Reality Association (GVRA). This association is very much industry-driven due to the memberships of Google, Microsoft, Facebook (Oculus), Sony Interactive Entertainment (PlayStation VR) and HTC (Vive).

'''VR/AR Association (VRARA)'''

The VR/AR Association is an international organisation designed to foster collaboration between innovative companies and people in the VR and AR ecosystem that accelerates growth, fosters research and education, helps develop industry standards, connects member organisations and promotes the services of member companies <ref>VR/AR Association. <nowiki>https://www.thevrara.com/</nowiki> (accessed Nov. 11, 2020).</ref>. The association reports over 400 organisations registered as members. VRARA has regional chapters in many countries around the globe.

'''VR Industry Forum (VRIF)'''

The Virtual Reality Industry Forum <ref>VR-Industry forum. <nowiki>https://www.vr-if.org/</nowiki> (accessed Nov. 11, 2020).</ref> is composed of a broad range of participants from sectors including, but not limited to, movies, television, broadcast, mobile, and interactive gaming ecosystems, comprising content creators, content distributors, consumer electronics manufacturers, professional equipment manufacturers and technology companies. Membership in the VR Industry Forum is open to all parties that support the purposes of the VR Industry Forum. The VR Industry Forum is not a standards development organisation, but will rely on, and liaise with, standards development organisations for the development of standards in support of VR services and devices. Adoption of any of the work products of the VR Industry Forum is voluntary; none of the work products of the VR Industry Forum shall be binding on Members or third parties.

'''THE AREA'''

The Augmented Reality for Enterprise Alliance (AREA) presents itself as the only global non-profit, member-driven organisation focused on reducing barriers to and accelerating the smooth introduction and widespread adoption of Augmented Reality by and for professionals <ref>Augmented Reality for Enterprise Alliance . <nowiki>https://thearea.org/</nowiki> (accessed Nov. 11, 2020).</ref>. The mission of the AREA is to help companies in all parts of the ecosystem to achieve greater operational efficiency through the smooth introduction and widespread adoption of interoperable AR-assisted enterprise systems.

'''International Virtual Reality Professionals Association (IVRPA)'''

The IVRPA mission is to promote the success of Professional VR Photographers and Videographers <ref>IVRPA. <nowiki>https://ivrpa.org/</nowiki> (accessed Nov. 11, 2020).</ref>. It strives to develop and support the professional and artistic uses of 360° panoramas, image-based VR and related technologies worldwide through education, networking opportunities, manufacturer alliances, marketing assistance, and technical support of its members' work. The association currently consists of more than 500 members, either individuals or companies, spread around the world.

'''The Academy of International Extended Reality (AIXR)'''

The AIXR is an international network with strong support by leading small and big companies in the immersive media domain <ref>“The Academy of International Extended Reality”. <nowiki>https://aixr.org/</nowiki> (accessed Nov. 20, 2020).</ref>. The aim is to connect people, projects, and knowledge, to enable growth, nurture talent, and develop standards, and to bring wider public awareness and understanding to the international VR & AR industry. A number of advisory groups in different application and technology domains hold focused discussions to foster progress on their topics.

'''MedVR'''

MedVR is an international network dedicated to the healthcare sector <ref>MedVR. <nowiki>https://medvr.io/</nowiki> (accessed Nov. 20, 2020).</ref>. The aim is to bring together clinicians, scientists, developers, designers, and other experts into interdisciplinary teams to lead the future of augmented and virtual reality (AR & VR) in healthcare. The goal is to educate, stimulate discussion, identify novel applications, and build cutting-edge prototypes.

'''Open AR Cloud Association (OARC)'''

The "Open AR Cloud Association" (OARC) is a global non-profit organization registered in Delaware, USA <ref>“Open AR Cloud (OARC)” <nowiki>https://www.openarcloud.org/</nowiki> (accessed Nov. 28, 2020).</ref>. Its mission is to drive the development of open and interoperable spatial computing technology, data and standards to connect the physical and digital worlds for the benefit of all.

=== European ===

'''EuroXR'''

EuroXR is an International non-profit Association <ref>EuroXR. <nowiki>https://www.eurovr-association.org/</nowiki> (accessed Nov. 11, 2020).</ref>, which provides a network for all those interested in Virtual Reality (VR) and Augmented Reality (AR) to meet, discuss and promote all topics related to VR/AR technologies. EuroXR (EuroVR) was founded in 2010 as a continuation of the work in the FP6 Network of Excellence INTUITION (2004 – 2008). The main activity is the organisation of the EuroXR annual event. This series was initiated in 2004 by the INTUITION Network of Excellence in Virtual and Augmented Reality, supported by the European Commission until 2008, and incorporated within the Joint Virtual Reality Conferences (JVRC) from 2009 to 2013. Besides individual members, several organisational members are part of EuroXR, such as AVRLab, Barco, List CEA Tech, AFVR, GoTouchVR, Haption, catapult, Laval Virtual, VTT, Fraunhofer FIT and Fraunhofer IAO, as well as some European universities.

'''Extended Reality for Education and Research in Academia (XR ERA)'''

XR ERA was recently founded in 2020 by Leiden University, Centre for Innovation <ref>“Extended Reality for Education and Research in Academia”. <nowiki>https://xrera.eu/</nowiki> (accessed Nov. 20, 2020).</ref>. The aim is to bring people from education, research and industry together, both online and offline, to enhance education and research in academia by making use of what XR has to offer.

'''Women in Immersive Technologies Europe (WiiT Europe)'''

WiiT Europe is a European non-profit organization that aims to empower women by promoting diversity, equality and inclusion in VR, AR, MR and other future immersive technologies <ref>“Women in Immersive Tech”. <nowiki>https://www.wiiteurope.org/</nowiki> (accessed Nov. 20, 2020).</ref>. Started in 2016 as a Facebook group, WiiT Europe is an inclusive network of talented women who are driving Europe’s XR sectors.

=== National ===

'''ERSTER DEUTSCHER FACHVERBAND FÜR VIRTUAL REALITY (EDFVR)'''

The EDFVR is the first German business association for immersive media <ref>EDFVR e.V.. <nowiki>http://edfvr.org/</nowiki> (accessed Nov. 11, 2020).</ref>. Start-ups and established entrepreneurs, enthusiasts and developers from Germany have joined together to foster immersive media in Germany.

'''Virtual Reality e.V. Berlin Brandenburg (VRBB)'''

VRBB is a publicly-funded association dedicated to advancing the virtual, augmented and mixed reality industries <ref>VRBB. <nowiki>https://virtualrealitybb.org/</nowiki> (accessed Nov. 11, 2020).</ref>. The association was founded in 2016 and its members are HighTech companies, established Media Companies, Research Institutes and Universities, Start-Ups, Freelancers and plain VR enthusiasts. Since 2016, the VRBB has organised a yearly event named VRNowCon, which attracts international participants.

'''Virtual Dimension Center (VDC)'''

VDC considers itself the largest B2B network for XR technologies in Germany <ref>Virtual Dimension Center (VDC). <nowiki>https://www.vdc-fellbach.de/en/</nowiki> (accessed Nov. 20, 2020).</ref>. It was founded in 2020 and currently has 90 members from industry, IT, research and higher education. The focus is on Virtual Engineering, Virtual Reality and 3D-simulation. The VDC offers a communication platform for members, a knowledge database, networking, and support for funding acquisition.

'''Virtual and Augmented Reality Association Austria (VARAA)'''

VARAA is the independent association of professional VR/AR users and companies in Austria <ref>GEN Summit. <nowiki>https://www.gensummit.org/sponsor/varaa/</nowiki> (accessed Nov. 11, 2020).</ref>. The aim is to promote VR/AR, raise awareness of it, and provide support in using it. The association represents the interests of the industry and links professional users and developers. Through a strong network of partners and industry contacts it is the single point of contact in Austria to the international VR/AR scene and the global VR/AR Association (VRARA Global).

'''AFXR (France)'''

The AFXR was born from the merger in 2019 of two major French associations, AFVR and Uni-XR <ref>AFXR. <nowiki>https://www.afxr.org</nowiki> (accessed Nov. 19, 2020).</ref>. It aims to bring together the community of French professionals working in immersive technologies or using XR technologies. The association is neutral, non-commercial and is not affiliated to any economic, territorial or political body. It has over 200 members.

'''Virtual Reality Finland'''

The goal of the association is to help Finland become a leading country in VR and AR technologies <ref>Virtual Reality Finland ry. <nowiki>https://vrfinland.fi</nowiki> (accessed Nov. 11, 2020).</ref>. The association is open to everyone interested in VR and AR. The association organises events, supports VR and AR projects and shares information on the state and development of the ecosystem.

'''Finnish Virtual Reality Association (FIVR)'''

The purpose of the Finnish Virtual Reality Association is to advance virtual reality (VR) and augmented reality (AR) development and related activities in Finland <ref>FIVR. <nowiki>https://fivr.fi/</nowiki> (accessed Nov. 11, 2020).</ref>. The association is for professionals and hobbyists of virtual reality. FIVR is a non-profit organisation dedicated to advancing the state of Virtual, Augmented and Mixed Reality development in Finland. The goal is to make Finland a world-leading environment for XR activities. This is to be achieved by establishing a multidisciplinary and tightly-knit developer community and a complete, top-quality development ecosystem, which combines the best resources, knowledge, innovation and strength of the public and private sectors.

'''XR Nation (Finland)'''

Starting in the spring of 2018 in Helsinki, Finland, XR Nation's goal has always been to bring the AR & VR communities in the Nordics and Baltic region closer together <ref>XRNATION. <nowiki>https://www.xrnation.com/</nowiki> (accessed Nov. 19, 2020).</ref>. XR Nation counts 500+ members and 80+ companies.

'''VIRTUAL SWITZERLAND'''

This Swiss association has more than 60 members from academia and industry <ref>Virtual Switzerland. <nowiki>http://virtualswitzerland.org/</nowiki> (accessed Nov. 11, 2020).</ref>. It promotes immersive technologies and simulation of virtual environments (XR), their developments and implementation. It aims to foster research-based innovation projects, dialogue and knowledge exchange between academic and industrial players across all economic sectors. It gathers minds and creates links to foster ideas via its nation-wide professional network and facilitates the genesis of projects and their applications to Innosuisse for funding opportunities.

'''Immerse UK'''

Immerse UK is the UK’s leading membership organisation for immersive technologies <ref>ImmerseUK. <nowiki>https://www.immerseuk.org/</nowiki> (accessed Nov. 19, 2020).</ref>. They bring together industry, research and academic organisations, public sector and innovators to help fast-track innovation, R&D, scalability and company growth. They are the UK’s only membership organisation dedicated to supporting content, applications, services and solution providers developing immersive technology solutions or companies creating content or experiences using immersive tech.

'''VRINN (Norway)'''

VRINN is a cluster of companies operating in Norway in the fields of VR, AR, and gamification <ref>VRINN. <nowiki>https://vrinn.no/</nowiki> (accessed Nov. 23, 2020).</ref>. The aim of the cluster is to offer its members a platform to exchange ideas, develop projects and thus jointly advance the development of future learning. VRINN also helps companies to network internationally, to market themselves and to develop further. Since 2017, VRINN has organised the VR Nordic Forum, a conference focusing on “immersive learning technologies” – the use of VR & AR in learning, training and storytelling. With 750 participants gathered during the last edition in October 2020, VR Nordic Forum is the biggest XR event in northern Europe.

== Patents ==

The number of patents filed is a useful indicator for technology development. In a working paper by Eurofound, the years 2010 for AR and 2014 for VR are identified as the starting years for increased patent activity <ref>Eurofound, Game-changing technologies: Transforming production and employment in Europe, Luxembourg: Publications Office of the European Union, 2020.</ref>. Analysing patent data until 2017, the USA emerges as leader in patent applications, followed by China. The XR4ALL consortium recently carried out a study using the database available at the European Patent Office <ref>Espacenet. <nowiki>https://worldwide.espacenet.com</nowiki> (accessed Nov. 11, 2020).</ref>. The database was searched for the period 2019 until today and the search was limited to the following keywords: Virtual Reality, Augmented Reality, immersive, eXtended Reality, Mixed Reality, haptic. The most relevant 50 European patents have been selected and listed in the table below. The publication dates in the rightmost column of the table below range between April 15th, 2019 and October 1st, 2020.

{| class="wikitable"

|'''#'''

| Line 516: | Line 518: | ||

An intermediate category is labelled as 3-DoF+. It is similar to 360-degree video with 3-DoF, but it additionally supports head motion parallax. Here too, the observer stands at the centre of the scene, but he/she can move his/her head, allowing him/her to look slightly to the sides and behind near objects. The benefit of 3-DoF+ is an advanced and more natural viewing experience, especially in case of stereoscopic 3D video panoramas.

Finally, for the creation of 3D data from real scenes, the outside-in capture approach is used. The observer can freely move through the scene while looking around. The interaction allows six degrees of freedom (6DoF): the three directions of translation plus the three Euler angles. Several sensors fall into this category, such as (1) multi-view cameras including light-field cameras, depth and range sensors, RGB-D cameras, and (2) complex multi-view volumetric capture systems. A good overview of VR technology and related capture approaches is presented in <ref>C. Anthes, R. J. García-Hernández, M. Wiedemann and D. Kranzlmüller, "State of the art of virtual reality technology," 2016 IEEE Aerospace Conference, Big Sky, MT, 2016, pp. 1-19, doi: 10.1109/AERO.2016.7500674.</ref><ref>State of VR. <nowiki>http://stateofvr.com/</nowiki> (accessed Nov. 11, 2020).</ref><ref>“3DOF, 6DOF, RoomScale VR, 360 Video and Everything In Between.” Packet39. <nowiki>https://packet39.com/blog/2018/02/25/3dof-6dof-roomscale-vr-360-video-and-everything-in-between/</nowiki> (accessed Nov. 11, 2020).</ref>.
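The three interaction categories can be illustrated with a small data structure. The sketch below is purely illustrative: the class name <code>Pose</code>, the helper <code>constrain</code>, the yaw/pitch/roll naming of the Euler angles, and the 10 cm head-motion limit are assumptions made for this example and are not taken from any of the cited sources. The per-axis clamp is also a simplification of how a real renderer would limit head motion.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Pose:
    # Translation along three axes (metres).
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    # Orientation as three Euler angles (degrees); the naming is an assumption.
    yaw: float = 0.0
    pitch: float = 0.0
    roll: float = 0.0


def constrain(pose: Pose, max_offset: float) -> Pose:
    """Clamp each translation component to [-max_offset, max_offset].

    The rotation is left untouched, so max_offset = 0 yields a 3-DoF view.
    """
    def clamp(value: float) -> float:
        return max(-max_offset, min(max_offset, value))

    return replace(pose, x=clamp(pose.x), y=clamp(pose.y), z=clamp(pose.z))


# A tracked head pose as a 6-DoF system would use it.
head = Pose(x=0.8, y=0.05, z=-1.5, yaw=30.0, pitch=-10.0)

view_3dof = constrain(head, 0.0)        # rotation only (360-degree video)
view_3dof_plus = constrain(head, 0.1)   # small head offsets (hypothetical 10 cm limit)
view_6dof = head                        # free movement through the scene
```

In this simplified model, the three categories differ only in how much of the translation component is passed on to the renderer.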

=== 360-degree video (3-DoF) ===

Panoramic 360-degree video is certainly one of the most exciting viewing experiences when watched through VR glasses. However, today’s technology still suffers from some technical restrictions.

One restriction can be explained very well by referring to the capabilities of the human vision system. It has a spatial resolution of about 60 pixels per degree. Hence, a panoramic capture system requires a resolution of more than 20,000 pixels (20K) at the full 360-degree horizon and meridian, the vertical direction. Current state-of-the-art commercial panoramic video cameras are far below this limit, ranging from 2,880 pixels horizontal resolution (Kodak SP360 4K Dual Pro, 360 Fly 4K) via 4,096 pixels (Insta360 4K) up to 11k pixels (Insta360 Titan). In <ref>L. Brown. “Top 10 professional 360 degree cameras.” Wondershare. <nowiki>https://filmora.wondershare.com/virtual-reality/top-10-professional-360-degree-cameras.html</nowiki> (accessed Nov. 11, 2020).</ref>, a recent overview of the top ten 360-degree video cameras is presented, all of which offer monoscopic panoramic video.
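The resolution requirement follows from a simple multiplication, sketched below. The camera values are the horizontal resolutions quoted above; reading "11k" as 11,000 pixels is an assumption made for this example, and the helper name <code>required_pixels</code> is hypothetical.

```python
# Sketch: pixels needed along the horizon to match human visual acuity.
ACUITY_PX_PER_DEG = 60  # approximate figure quoted in the text


def required_pixels(fov_deg: float, px_per_deg: float = ACUITY_PX_PER_DEG) -> int:
    """Pixels needed to cover a field of view at the given angular resolution."""
    return round(fov_deg * px_per_deg)


horizon_px = required_pixels(360)

# Horizontal resolutions quoted in the text ("11k" taken as 11,000: assumption).
cameras = {
    "Kodak SP360 4K Dual Pro": 2880,
    "Insta360 4K": 4096,
    "Insta360 Titan": 11000,
}

# Fraction of the required horizontal resolution that each camera delivers.
coverage = {name: px / horizon_px for name, px in cameras.items()}

print(f"Required horizon resolution: {horizon_px} pixels")
for name, share in coverage.items():
    print(f"{name}: {share:.0%} of the requirement")
```

With 60 pixels per degree, the full horizon needs 21,600 pixels, which is the "more than 20K" figure mentioned above.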

Fraunhofer HHI already developed an omni-directional 360-degree video camera with 10K resolution in 2016. This camera uses a mirror system together with 10 individual HD cameras along the horizon and one 4K camera for the zenith. Upgrading it completely to 4K cameras would even support the required 20K resolution at the horizon. The capture system of this camera also includes real-time stitching and online preview of the panoramic video in full resolution <ref>“OmniCam-360”. Fraunhofer HHI. <nowiki>https://www.hhi.fraunhofer.de/en/departments/vit/technologies-and-solutions/capture/panoramic-uhd-video/omnicam-360.html</nowiki> (accessed Nov. 11, 2020).</ref>.

However, the maximum capture resolution is just one aspect. A major bottleneck concerning 360-degree video quality is the restricted display resolution of the existing VR headsets. Supposing that the required field of view is 120 degrees in the horizontal direction and 60 degrees in the vertical direction, VR headsets need two displays, one for each eye, each with a resolution of 8K by 4K. As discussed in section [[#Input and output devices]], this is far away from what VR headsets can achieve today.
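The display requirement can be checked with the same acuity figure of about 60 pixels per degree used earlier in this section. The following sketch only restates the arithmetic behind the "8K by 4K" estimate; the variable names are illustrative.

```python
# Sketch: per-eye display resolution needed for a 120 x 60 degree field of view
# at roughly 60 pixels per degree (the acuity figure used in this section).
PX_PER_DEG = 60
FOV_H_DEG = 120
FOV_V_DEG = 60

width_px = FOV_H_DEG * PX_PER_DEG    # horizontal pixels per eye
height_px = FOV_V_DEG * PX_PER_DEG   # vertical pixels per eye

pixels_per_eye = width_px * height_px
pixels_both_eyes = 2 * pixels_per_eye

print(f"Per eye: {width_px} x {height_px} pixels")
print(f"Both eyes: {pixels_both_eyes / 1e6:.1f} megapixels per frame")
```

The result, 7,200 by 3,600 pixels per eye, is what the text rounds to "8K by 4K".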

=== Head motion parallax (3-DoF+) ===

A further drawback of 360-degree video is the missing capability of head motion parallax. In fact, 360-degree video with 3DoF is only sufficient for monocular video panoramas, or for stereoscopic-3D panoramic views with far objects only. In case of stereo 3D with near objects, the viewing condition is confusing, because it is different from what humans are accustomed to from real-world viewing.

Nowadays, many VR headsets support local on-board head tracking (see [[#VR Headsets]]). This makes it possible to enable head motion parallax while viewing a 360-degree panoramic video in VR headsets. To support this option, capturing often combines photorealistic 3D scene compositions with segmented stereoscopic videos. For example, one or more stereoscopic videos are recorded and keyed in a green screen studio. In parallel, the photorealistic scene is generated by 3D modelling methods like photogrammetry (see [[#Multi-camera geometry]] and [[#3D Reconstruction]]). Then, the separated stereoscopic video samples are placed at different locations in the above-mentioned photorealistic 3D scene, possibly in combination with additional 3D graphic objects. The whole composition is displayed as a 360-degree stereo panorama in a tracked VR headset via common render engines. The user can look slightly behind the inserted video objects while moving the head and, hence, gets the natural impression of head motion parallax.

Such a 3-DoF+ experience was shown for the first time by Intel in cooperation with Hype VR in January 2017 at CES as a so-called walk-around VR video experience. This experience featured a stereoscopic outdoor panorama from Vietnam with a moving water buffalo and some static objects presented in stereo near to the viewer <ref>“Intel demos world's first 'walk-around' VR video experience”. Intel, <nowiki>https://www.youtube.com/watch?v=DFobWjSYst4</nowiki> (accessed Nov. 11, 2020).</ref>. The user could look behind the near objects while moving the head. Similar and more sophisticated experiences have later been shown, e.g., by Sony, Lytro, and others. Likely the most popular one is the experience “Tom Grennan VR” that was presented for the first time in July 2018 by Sony on PlayStation VR. Tom Grennan and his band were recorded in stereo in a green screen studio and then placed in a photorealistic 3D reconstruction of a real music studio that had been scanned with Lidar technology beforehand.

=== 3D capture of static objects and scenes (6-DoF) === | === 3D capture of static objects and scenes (6-DoF) === | ||

The 3D capture of objects and scenes has reached a mature state to allow professionals and amateurs to create and manipulate large amount of 3D data such as point clouds and meshes. The capture technology can be classified into active and passive ones. On the active sensor side, laser or LIDAR (light detection and ranging), time-of-flight, and structured-light techniques can be mentioned. Photogrammetry is the passive 3D capture approach that relies on multiple images of an object or a scene captured with a camera from different viewpoints. Especially the increase in quality and resolution of cameras developed the use of photogrammetry. A recent overview can be found in | The 3D capture of objects and scenes has reached a mature state to allow professionals and amateurs to create and manipulate large amount of 3D data such as point clouds and meshes. The capture technology can be classified into active and passive ones. On the active sensor side, laser or LIDAR (light detection and ranging), time-of-flight, and structured-light techniques can be mentioned. Photogrammetry is the passive 3D capture approach that relies on multiple images of an object or a scene captured with a camera from different viewpoints. Especially the increase in quality and resolution of cameras developed the use of photogrammetry. A recent overview can be found in <ref>F. Fadli, H. Barki, P. Boguslawski, L. Mahdjoubi, “3D Scene Capture: A Comprehensive Review of Techniques and Tools for Efficient Life Cycle Analysis (LCA) and Emergency Preparedness (EP) Applications,” ''presented at International Conference on Building Information Modelling (BIM) in Design, Construction and Operations'', Bristol, UK, 2015, doi: 10.2495/BIM150081.</ref>. 
The maturity of the technology has led to a number of commercial 3D body scanners on the market, ranging from 3D scanning booths and 3D scan cabins to body scanning rigs, body scanners with a rotating platform, and even home body scanners embedded in a mirror, all for single-person use <ref>“The 8 best 3D body scanners in 2020.” Aniwaa. <nowiki>https://www.aniwaa.com/best-3d-body-scanners/</nowiki> (accessed Nov. 11, 2020).</ref>. | ||

=== 3D capture of volumetric video (6DoF) === | === 3D capture of volumetric video (6DoF) === | ||

The techniques from section | The techniques from section [[#3D capture of static objects and scenes (6-DoF)]] are limited to static scenes and objects. For dynamic scenes, static objects can be animated by scripts or motion capture systems, and a virtual camera can be navigated through the static 3D scene. However, modelling and animating moving characters is time-consuming and often cannot reproduce all the moving details of a real human, especially facial expressions and the motion of clothes. | ||

In contrast to these conventional methods, volumetric video is a new technique that scans humans, in particular actors, with plenty of cameras from different directions, often in combination with active depth sensors. During a complex post-production process that we describe in | In contrast to these conventional methods, volumetric video is a new technique that scans humans, in particular actors, with plenty of cameras from different directions, often in combination with active depth sensors. During a complex post-production process that we describe in section [[#Volumetric Video]], this large amount of initial data is then merged to a dynamic 3D mesh representing a full free-viewpoint video. It has the naturalism of high-quality video, but it is a 3D object where a user can walk around in the virtual 3D scene. | ||

In recent years, a number of volumetric studios have been created that are able to produce high-quality volumetric videos. Usually the subject of the volumetric video is the entire human body, but some volumetric studios provide specific solutions designed to handle explicitly the human face | In recent years, a number of volumetric studios have been created that are able to produce high-quality volumetric videos. Usually the subject of the volumetric video is the entire human body, but some volumetric studios provide specific solutions designed to handle explicitly the human face <ref>OTOY. <nowiki>https://home.otoy.com/capture/lightstage/</nowiki> (accessed Nov. 11, 2020).</ref>. The volumetric video can be viewed in real-time from a continuous range of viewpoints chosen at any time during playback. Most studios focus on a capture volume that is viewed spherically in 360 degrees from the outside. A large number of cameras are placed around the scene (e.g. in studios from 8i <ref>8i. <nowiki>http://8i.com</nowiki> (accessed Nov. 11, 2020).</ref>, Volucap <ref name=":12">Volucap. <nowiki>http://www.volucap.de</nowiki> (accessed Nov. 11, 2020).</ref>, 4DViews <ref>4DViews. <nowiki>http://www.4dviews.com</nowiki> (accessed Nov. 11, 2020).</ref>, Evercoast <ref>Evercoast. <nowiki>https://evercoast.com/</nowiki> (accessed Nov. 11, 2020).</ref>, HOLOOH <ref>HOLOOH. <nowiki>https://www.holooh.com/</nowiki> (accessed Nov. 11, 2020).</ref>, and Volograms <ref>Volograms. <nowiki>https://volograms.com/</nowiki> (accessed Nov. 11, 2020).</ref>) providing input for volumetric video similar to frame-by-frame photogrammetric reconstruction of the actors, while Microsoft's Mixed reality Capture Studios <ref>Microsoft. <nowiki>http://www.microsoft.com/en-us/mixed-reality/capture-studios</nowiki> (accessed Nov. 11, 2020).</ref> additionally rely on active depth sensors for geometry acquisition. 
In order to separate the scene from the background, these studios are generally equipped with green screens for chroma keying. Only Volucap <ref name=":12" /> uses a bright backlit background instead, to avoid green spill in the texture and to provide diffuse illumination. This concept is based on a prototype system developed by Fraunhofer HHI <ref>O. Schreer, I. Feldmann, S. Renault, M. Zepp, P. Eisert, P. Kauff, “Capture and 3D Video Processing of Volumetric Video”, ''2019 IEEE International Conference on Image Processing (ICIP)'', Taipei, Taiwan, Sept. 2019.</ref>. | ||

=== Notes === | === Notes === | ||

<references /> | |||

== 3D sound capture == | == 3D sound capture == | ||

| Line 550: | Line 555: | ||

=== Human sound perception === | === Human sound perception === | ||

To classify 3D sound capture techniques, it is important to understand how human sound perception works. The brain uses different stimuli when locating the direction of a sound. The most well-known is probably the interaural level difference (ILD) of a sound wave entering the left and right ears. Because low frequencies are bent around the head, the human brain can only locate a sound source through ILD if the sound contains frequencies higher than about 1,500 Hz | To classify 3D sound capture techniques, it is important to understand how human sound perception works. The brain uses different stimuli when locating the direction of a sound. The most well-known is probably the interaural level difference (ILD) of a sound wave entering the left and right ears. Because low frequencies are bent around the head, the human brain can only locate a sound source through ILD if the sound contains frequencies higher than about 1,500 Hz <ref name=":13">Schnupp, J., Nelken, I., and King, A., ''Auditory neuroscience: Making sense of sound'', MIT Press, 2011</ref>. To locate sound sources containing lower frequencies, the brain uses the interaural time difference (ITD). The time difference between sound waves arriving at the left and right ears is used to determine the direction of a sound <ref name=":13" />. Due to the symmetric positioning of the human ears in the same horizontal plane, these differences only allow one to locate the sound in the horizontal plane but not in the vertical direction. With these stimuli alone, human sound perception also cannot distinguish between sound waves that come from the front and those that come from the back. For a more exact analysis of the sound direction, the Head-Related Transfer Function (HRTF) is used. This function describes the filtering effect of the human body, especially of the head and the outer ear. 
Incoming sound waves are reflected and absorbed at the head surface in a way that depends on their direction; therefore, the filtering effect changes as a function of the direction of the sound source. The brain learns and uses these resonance and attenuation patterns to localise sound sources in three-dimensional space. See <ref name=":13" /> for a more detailed description. | ||
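
As a worked illustration of the interaural time difference described above, the following Python sketch uses the classical spherical-head approximation (often attributed to Woodworth), ITD = (r/c)(θ + sin θ). The head radius, the speed of sound, and the function names are assumptions chosen for illustration and are not taken from the cited reference. The sketch also shows the front/back ambiguity: a source at 30° and one at 150° yield the same ITD.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumption: air at roughly 20 °C
HEAD_RADIUS_M = 0.0875      # assumption: commonly used average head radius


def fold_to_frontal(azimuth_deg):
    """Map an azimuth in [-180, 180] degrees onto [-90, 90].

    In a spherical-head model, a rear source produces the same ITD as
    its mirror image in the front, hence the folding.
    """
    if azimuth_deg > 90.0:
        return 180.0 - azimuth_deg
    if azimuth_deg < -90.0:
        return -180.0 - azimuth_deg
    return azimuth_deg


def woodworth_itd(azimuth_deg, head_radius=HEAD_RADIUS_M, c=SPEED_OF_SOUND_M_S):
    """Approximate ITD in seconds for a distant source (0 degrees = straight ahead)."""
    theta = math.radians(fold_to_frontal(azimuth_deg))
    return head_radius / c * (theta + math.sin(theta))


if __name__ == "__main__":
    for azimuth in (0, 30, 90, 150):
        print(azimuth, "deg:", round(woodworth_itd(azimuth) * 1e6, 1), "microseconds")
```

Under these assumptions, the largest ITD occurs for a source directly to the side and stays well below one millisecond.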

=== 3D microphones === | === 3D microphones === | ||

Using the ILD and ITD stimuli as well as specific microphone arrangements, classical stereo microphone setups can be extended and combined to capture 360-degree sound (only in horizontal plane) or truly 3D sound. Complete microphone systems are Schoeps IRT-Cross, Schoeps ORTF Surround, Schoeps ORTF-3D, Nevaton BPT, Josephson C700S, Edge Quadro. Furthermore, any custom microphone setup can be used in combination with a spatial encoder software tool. As an example, Fraunhofer upHear is a software library to encode the audio output from any microphone setup into a spatial audio format | Using the ILD and ITD stimuli as well as specific microphone arrangements, classical stereo microphone setups can be extended and combined to capture 360-degree sound (only in horizontal plane) or truly 3D sound. Complete microphone systems are Schoeps IRT-Cross, Schoeps ORTF Surround, Schoeps ORTF-3D, Nevaton BPT, Josephson C700S, Edge Quadro. Furthermore, any custom microphone setup can be used in combination with a spatial encoder software tool. As an example, Fraunhofer upHear is a software library to encode the audio output from any microphone setup into a spatial audio format <ref>Fraunhofer IIS. <nowiki>https://www.iis.fraunhofer.de/en/ff/amm/consumer-electronics/uphear-microphone.html</nowiki> (accessed Nov. 11, 2020).</ref>. Another example is the Schoeps Double MS Plugin, which can encode specific microphone setups. | ||

=== Binaural microphones === | === Binaural microphones === | ||

An easy way to capture a spatial aural representation is to use the previously mentioned HRTF (see | An easy way to capture a spatial aural representation is to use the previously mentioned HRTF (see [[#Human sound perception]]). Two microphones are placed inside the ears of a replica of the human head to simulate the HRTF. The time response and the related frequency response of the received stereo signal contain the specific HRTF information and the brain can decode it when the stereo signal is listened to over headphones. Typical systems are Neumann KU100, Davinci Head Mk2, Sennheiser MKE2002, and Kemar Head and Torso. Because every human has a very individual HRTF, this technique only works when the HRTF recorded by the binaural microphone is similar to the HRTF of the person listening to the recording. Moreover, most problematic in the context of XR applications is the fact that the recording is static, which means that the position of the listener cannot be changed afterwards. This makes binaural microphones incompatible with most XR cases. To solve this problem, binaural recordings in different directions are recorded and mixed afterwards depending on the user position in the XR environment. As this technique is complex and costly, it is not used so frequently anymore. Examples of such systems are the 3Dio Omni Binaural Microphone and the Hear360 8Ball. Even though HRTF-based recording techniques for XR are mostly outdated, the HRTF-based approach is very important in audio rendering for headsets (see [[#Binaural rendering]]). | ||
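
The mixing of several binaural recordings according to the user's orientation can be sketched as a simple crossfade. The following Python example is only an illustration under stated assumptions: it assumes that the binaural pairs were recorded at evenly spaced yaw angles starting at 0°, and it uses a linear crossfade between the two nearest recordings. The function names and the linear weighting are hypothetical and do not describe how any of the products mentioned above work internally.

```python
import math


def crossfade_weights(head_yaw_deg, num_pairs=4):
    """Return one weight per binaural pair for the given head yaw.

    Assumption: pair i was recorded facing i * (360 / num_pairs) degrees.
    Only the two neighbouring pairs receive a non-zero weight.
    """
    spacing = 360.0 / num_pairs
    position = (head_yaw_deg % 360.0) / spacing
    lower = int(math.floor(position)) % num_pairs
    upper = (lower + 1) % num_pairs
    frac = position - math.floor(position)
    weights = [0.0] * num_pairs
    weights[lower] += 1.0 - frac
    weights[upper] += frac
    return weights


def mix_binaural(pairs, head_yaw_deg):
    """Mix one (left, right) sample per recorded direction into a single pair."""
    weights = crossfade_weights(head_yaw_deg, len(pairs))
    left = sum(w * p[0] for w, p in zip(weights, pairs))
    right = sum(w * p[1] for w, p in zip(weights, pairs))
    return left, right


if __name__ == "__main__":
    samples = [(1.0, 0.0), (0.0, 1.0), (0.0, 0.0), (0.0, 0.0)]
    print(mix_binaural(samples, 45.0))
```

In practice, the same weights would be applied to whole audio buffers rather than to single samples.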

=== Ambisonic microphones === | === Ambisonic microphones === | ||

Ambisonics describes a sound field by spherical harmonic modes. Unlike the previously mentioned capture techniques, the recorded channels cannot be connected directly to a specific loudspeaker setup, like stereo or surround sound. Instead, it describes the complete sound field in terms of one monopole and several dipoles. In higher-order Ambisonics (HOA), quadrupoles and more complex polar patterns are also derived from the spherical harmonic decomposition. | Ambisonics describes a sound field by spherical harmonic modes. Unlike the previously mentioned capture techniques, the recorded channels cannot be connected directly to a specific loudspeaker setup, like stereo or surround sound. Instead, it describes the complete sound field in terms of one monopole and several dipoles. In higher-order Ambisonics (HOA), quadrupoles and more complex polar patterns are also derived from the spherical harmonic decomposition. | ||
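
To make the monopole and dipole description concrete, the following Python sketch encodes a single mono sample arriving from a given direction into the four first-order components W (monopole) and X, Y, Z (dipoles). It assumes the traditional B-format convention in which W is scaled by 1/√2, with azimuth measured counter-clockwise from the front and elevation measured upwards; other normalisations exist, and the function name is chosen only for illustration.

```python
import math


def encode_foa(sample, azimuth_deg, elevation_deg):
    """Encode one mono sample into first-order components (W, X, Y, Z).

    Assumption: traditional B-format weighting, i.e. W = s / sqrt(2).
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)               # omnidirectional (monopole) part
    x = sample * math.cos(az) * math.cos(el)  # front-back dipole
    y = sample * math.sin(az) * math.cos(el)  # left-right dipole
    z = sample * math.sin(el)                 # up-down dipole
    return w, x, y, z


if __name__ == "__main__":
    print(encode_foa(1.0, 0.0, 0.0))   # source straight ahead
    print(encode_foa(1.0, 90.0, 0.0))  # source to the left
```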

In general, Ambisonics signals need a decoder to produce a playback-compatible loudspeaker signal that depends on the direction and distance of the speakers. An HOA decoder with an appropriate multichannel speaker setup can give an accurate spatial representation of the sound field. Currently, there are many First Order Ambisonics (FOA) microphones, such as the Soundfield SPS200, Soundfield ST450, Core Sound TetraMic, Sennheiser Ambeo, Brahma Ambisonic, Røde NT-SF1, Audeze Planar Magnetic Microphone, and Oktava MK-4012. All FOA microphones use a tetrahedral arrangement of cardioid directivity microphones and record four channels (A-Format), which are encoded into the Ambisonics format (B-Format) afterwards. For more technical details on Ambisonics, see | In general, Ambisonics signals need a decoder to produce a playback-compatible loudspeaker signal that depends on the direction and distance of the speakers. An HOA decoder with an appropriate multichannel speaker setup can give an accurate spatial representation of the sound field. Currently, there are many First Order Ambisonics (FOA) microphones, such as the Soundfield SPS200, Soundfield ST450, Core Sound TetraMic, Sennheiser Ambeo, Brahma Ambisonic, Røde NT-SF1, Audeze Planar Magnetic Microphone, and Oktava MK-4012. All FOA microphones use a tetrahedral arrangement of cardioid directivity microphones and record four channels (A-Format), which are encoded into the Ambisonics format (B-Format) afterwards. For more technical details on Ambisonics, see <ref>Furness, R. K., “Ambisonics-an overview”, In ''Audio Engineering Society Conference: 8th International Conference: The Sound of Audio'', 1990.</ref>. | ||
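
The conversion from A-Format to B-Format mentioned above can be sketched as sums and differences of the four capsule signals. The Python example below assumes the common capsule layout front-left-up (FLU), front-right-down (FRD), back-left-down (BLD) and back-right-up (BRU) and a scaling factor of 0.5. Both the scaling and the function name are assumptions, and real converters additionally apply frequency-dependent correction filters, which are omitted here.

```python
def a_to_b_format(flu, frd, bld, bru, scale=0.5):
    """Convert one sample per tetrahedral capsule into (W, X, Y, Z).

    Assumption: capsules point front-left-up, front-right-down,
    back-left-down and back-right-up; no equalisation is applied.
    """
    w = scale * (flu + frd + bld + bru)  # omnidirectional sum
    x = scale * (flu + frd - bld - bru)  # front minus back
    y = scale * (flu - frd + bld - bru)  # left minus right
    z = scale * (flu - frd - bld + bru)  # up minus down
    return w, x, y, z


if __name__ == "__main__":
    # Equal pressure on all capsules leaves only the omnidirectional part.
    print(a_to_b_format(1.0, 1.0, 1.0, 1.0))
    # Signal on the two front capsules only produces a positive X component.
    print(a_to_b_format(1.0, 1.0, 0.0, 0.0))
```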