Main Page


The scope of eXtended Reality

Paul Milgram defined the well-known Reality-Virtuality Continuum in 1994 [1]. It describes the transition between reality at one end and a completely digital, computer-generated environment at the other. From a technology point of view, a newer umbrella term has since been introduced: eXtended Reality (XR). It covers Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR), as well as any future realities such technologies might bring. XR therefore spans the full spectrum of real and virtual environments. In Figure 1, the Reality-Virtuality Continuum is extended by this new umbrella term. The figure also shows a less well-known term, Augmented Virtuality. It refers to an approach in which elements of reality, e.g. the user's hand, appear in the virtual world; such approaches are usually subsumed under Mixed Reality.

Following the most common terminology, the major scenarios of eXtended Reality are defined as follows.

Going from left to right, Augmented Reality (AR) involves augmenting the perception of the real environment with virtual elements by mixing spatially registered digital content with the real world in real time [2]. Pokémon Go and Snapchat filters are everyday examples of this technology on smartphones and tablets. AR is also widely used in industry, where workers can wear AR glasses for support during maintenance or for training.

Augmented Virtuality (AV) involves augmenting the perception of a virtual environment with real elements. These elements of the real world are generally captured in real time and injected into the virtual environment. A well-known example of AV is capturing the user's body and injecting it into the virtual environment to improve the feeling of embodiment.

Virtual Reality (VR) applications use headsets to fully immerse users in a computer-simulated reality. These headsets generate realistic images and sounds, engaging two senses (sight and hearing) to create an interactive virtual world.

Mixed Reality (MR) includes both AR and AV. It blends real and virtual worlds to create complex environments in which physical and digital elements can interact in real time. It is defined as the part of the continuum between the purely real and the purely virtual environments, excluding both of these endpoints.

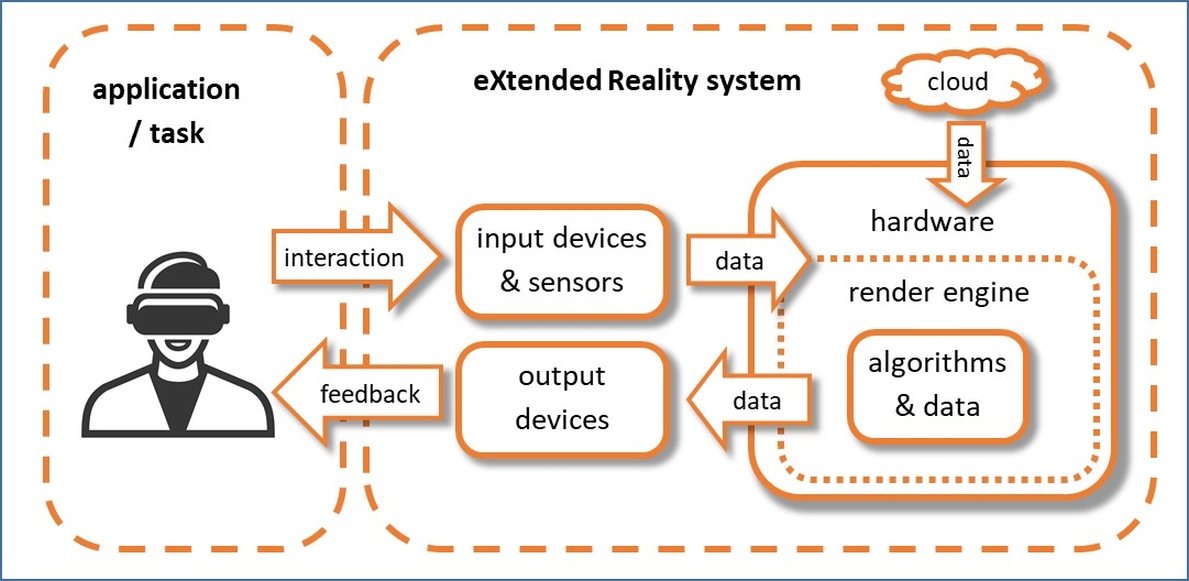

An important question is how broadly the term eXtended Reality (XR) spans across technologies and application domains. XR could be considered a fusion of AR, AV, and VR technologies, but in fact it involves many more technology domains. The necessary domains range from sensing the world (e.g. image, video, sound, haptics) to processing the data and rendering it. In addition, hardware is needed to sense, capture, track, register, and display, among many other tasks.

Figure 2 presents a simplified schematic diagram of an eXtended Reality system. On the left-hand side, the user performs a task using an XR application. Section 5 gives a complete overview of the relevant application domains, covering advertising, cultural heritage, education and training, Industry 4.0, health and medicine, security, journalism, social VR, and tourism. The user interacts with the scene, and this interaction is captured by a range of input devices and sensors, which can be visual, audio, motion, and many more (see sec. 4.1 and 4.2). The acquired data serves as input to the XR hardware, where further processing is performed in the render engine (see sec. 4.7). For example, the scene is rendered from the correct viewpoint, or the desired interaction with the scene is triggered. Sec. 4.3 and 4.4 give an overview of the major algorithms and approaches. The render engine uses not only the captured data but also additional data from other sources, such as edge cloud servers (see sec. 4.8) or 3D data stored on the device itself. The rendered scene is then fed back to the user, allowing them to perceive it. This is achieved through various means, such as XR headsets or other types of displays, as well as other sensory stimuli.

The complete set of technologies and applications will be described in the following chapters.

Notes

- [1] P. Milgram, H. Takemura, A. Utsumi, and F. Kishino, "Augmented Reality: A class of displays on the reality-virtuality continuum", Proc. SPIE vol. 2351, Telemanipulator and Telepresence Technologies, pp. 282–292, 1994.

- [2] R. T. Azuma, "A Survey of Augmented Reality", Presence: Teleoperators and Virtual Environments, vol. 6, no. 4, pp. 355–385, 199
